A comparison of client-server and P2P file distribution delays

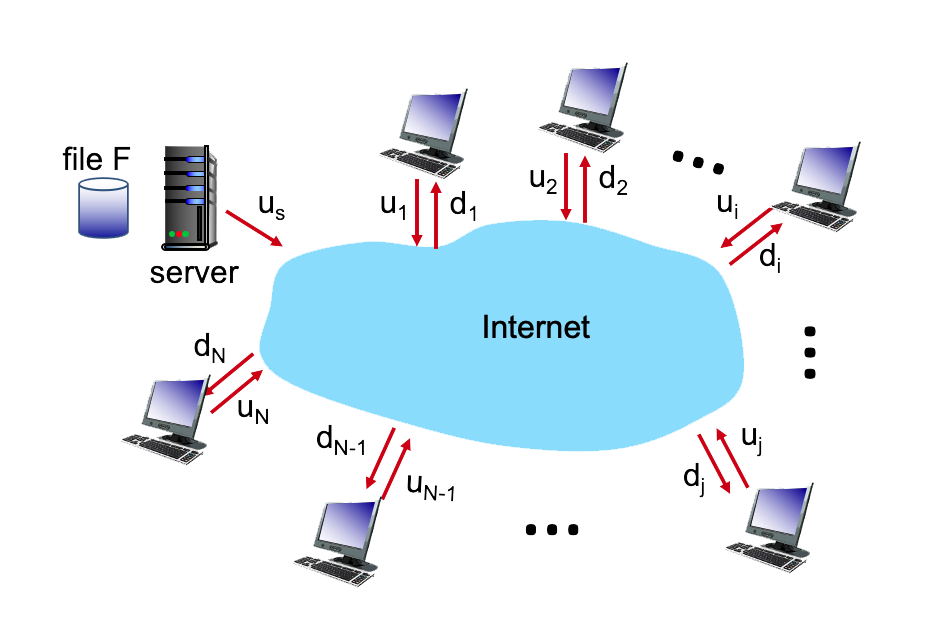

In this problem, you'll compare the time needed to distribute a file that is initially located at a server to clients via either client-server download or peer-to-peer download. Before beginning, you might want to first review Section 2.5 and the discussion surrounding Figure 2.22 in the text.

The problem is to distribute a file of size F = 3 Gbits to each of the 9 peers shown above. Suppose the server has an upload rate of us = 67 Mbps.

The 9 peers have upload rates of u1 = 26 Mbps, u2 = 23 Mbps, u3 = 27 Mbps, u4 = 17 Mbps, u5 = 23 Mbps, u6 = 19 Mbps, u7 = 24 Mbps, u8 = 28 Mbps, and u9 = 19 Mbps.

The 9 peers have download rates of d1 = 11 Mbps, d2 = 30 Mbps, d3 = 13 Mbps, d4 = 30 Mbps, d5 = 17 Mbps, d6 = 35 Mbps, d7 = 34 Mbps, d8 = 13 Mbps, and d9 = 27 Mbps.
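The solution relies on a few aggregate quantities derived from these rates. They are summarized here under one assumption: 1 Gbit = 1000 Mbits, which is the convention that reproduces the posted answers.

$$
\begin{aligned}
F &= 3\ \text{Gbits} = 3000\ \text{Mbits}, \qquad N = 9, \qquad u_s = 67\ \text{Mbps} \\
\sum_{i=1}^{9} u_i &= 26+23+27+17+23+19+24+28+19 = 206\ \text{Mbps} \\
u_s + \sum_{i=1}^{9} u_i &= 67 + 206 = 273\ \text{Mbps} \\
d_{\min} &= \min_i d_i = d_1 = 11\ \text{Mbps}
\end{aligned}
$$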

Question List

1. What is the minimum time needed to distribute this file from the central server to the 9 peers using the client-server model?

2. For the previous question, what is the root cause of this specific minimum time? Answer 's' (server) or 'ci', where i is the client's number.

3. What is the minimum time needed to distribute this file using peer-to-peer download?

4. For question 3, what is the root cause of this specific minimum time: the server (s), a client (c), or the combined upload of the clients and the server (cu)?

Solution

1. The minimum time needed to distribute the file is max(N·F/us, F/dmin) = max(9 × 3000/67, 3000/11) = max(402.99, 272.73) = 402.99 seconds.

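2. The root cause of the minimum time is s: the server's upload term N·F/us (402.99 s) exceeds the slowest client's download term F/dmin (272.73 s).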

3. The minimum time needed to distribute the file is max(F/us, F/dmin, N·F/(us + Σui)) = max(3000/67, 3000/11, 27000/273) = max(44.78, 272.73, 98.90) = 272.73 seconds.

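4. The root cause of the minimum time is c: client 1, which has the lowest download rate (d1 = 11 Mbps), needs F/dmin = 272.73 s to receive the file. That is longer than both the server term and the combined-upload term.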
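To double-check the arithmetic, here is a minimal Python sketch of the two lower bounds from Section 2.5, applied to this problem's data. It assumes 1 Gbit = 1000 Mbits, which reproduces the posted answers. The function names `client_server_time` and `p2p_time` are illustrative only and do not come from the text.

```python
# Minimal sketch: textbook lower bounds on file distribution time.
# Rates are in Mbps and the file size is in Mbits.
# Assumption: 1 Gbit = 1000 Mbits (matches the posted answers).

F = 3 * 1000  # file size in Mbits
U_S = 67      # server upload rate (Mbps)
UPLOAD = [26, 23, 27, 17, 23, 19, 24, 28, 19]    # u1..u9
DOWNLOAD = [11, 30, 13, 30, 17, 35, 34, 13, 27]  # d1..d9


def client_server_time(f, us, down):
    """Return (minimum time in seconds, root cause 's' or 'ci')."""
    n = len(down)
    server_term = n * f / us   # server must upload N full copies
    d_min = min(down)
    client_term = f / d_min    # slowest client must download one copy
    if server_term >= client_term:
        return server_term, "s"
    # Question 2's label format: 'c' plus the 1-based index of the slowest client.
    return client_term, f"c{down.index(d_min) + 1}"


def p2p_time(f, us, up, down):
    """Return (minimum time in seconds, root cause 's', 'c' or 'cu')."""
    n = len(down)
    terms = {
        "s": f / us,                   # server must upload at least one copy
        "c": f / min(down),            # slowest peer must download the file
        "cu": n * f / (us + sum(up)),  # total upload must carry N copies
    }
    label = max(terms, key=terms.get)  # the largest term is the bottleneck
    return terms[label], label


if __name__ == "__main__":
    t_cs, cause_cs = client_server_time(F, U_S, DOWNLOAD)
    t_p2p, cause_p2p = p2p_time(F, U_S, UPLOAD, DOWNLOAD)
    print(f"client-server: {t_cs:.2f} s, root cause {cause_cs}")
    print(f"P2P: {t_p2p:.2f} s, root cause {cause_p2p}")
```

Running it prints 402.99 s with root cause s for client-server, and 272.73 s with root cause c for P2P. Each bound is a maximum over a few terms, so the term that attains the maximum directly gives the root cause asked for in questions 2 and 4.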
